So, in this modern age of online interactions there are many people who do not have your best interests at heart. Take the digital way-back machine to scams like the “nigerian prince” scam or the trapping in a foreign country without passport scam that happens. The internet is not a very safe place because people suck.

One of the lesser known scams is referred to as “pig butchering” or, less often, “a hog slaughter.” This is a scam method much more akin to an old school “con” because it requires building relationships and then exploiting them. Imma use ChatGPT to summarize what Pig Butchering is.

Scammers build a false relationship or trust with the victim — (editor’s note: look at that glorious em dash… so pretty) gradually “fattening them up” emotionally, socially, or financially. After that, the scammer “slaughters” the victim — i.e., steals all their money. Typically the scam begins with a fake online persona (on dating sites, social media, texting apps, dating apps) — often with a flattering photo or profile, sometimes using stolen or stock images. The scammer will chat, “get to know” the target over days, weeks or months — a process of grooming, trust-building, emotional manipulation.

Once trust is established, the scammer introduces an investment opportunity. Almost always it’s pitched as cryptocurrency or some other high-yield scheme. They talk up huge returns, “help” you set up crypto accounts or move money, show fake proofs of gains, or even let you “pull out” a small return — all to build confidence.

Over time — after you’ve invested more and more — the scammer (or organized group behind them) disappears (or blocks you), and you lose access to your funds. What seemed like a legitimate investment or a romantic or friendly connection was a farce designed to drain your money.

Back to my thoughts. Thanks to ChatGPT for succinctly describing a relatively complex confidence game. Pig Butchering is really effective with middle-aged, lonely men. Typically the scammer is either a relatively young vaguely asian attractive woman, and old while men go ga-ga over a young woman who fauns all of=ver your handsome middle-agedness.

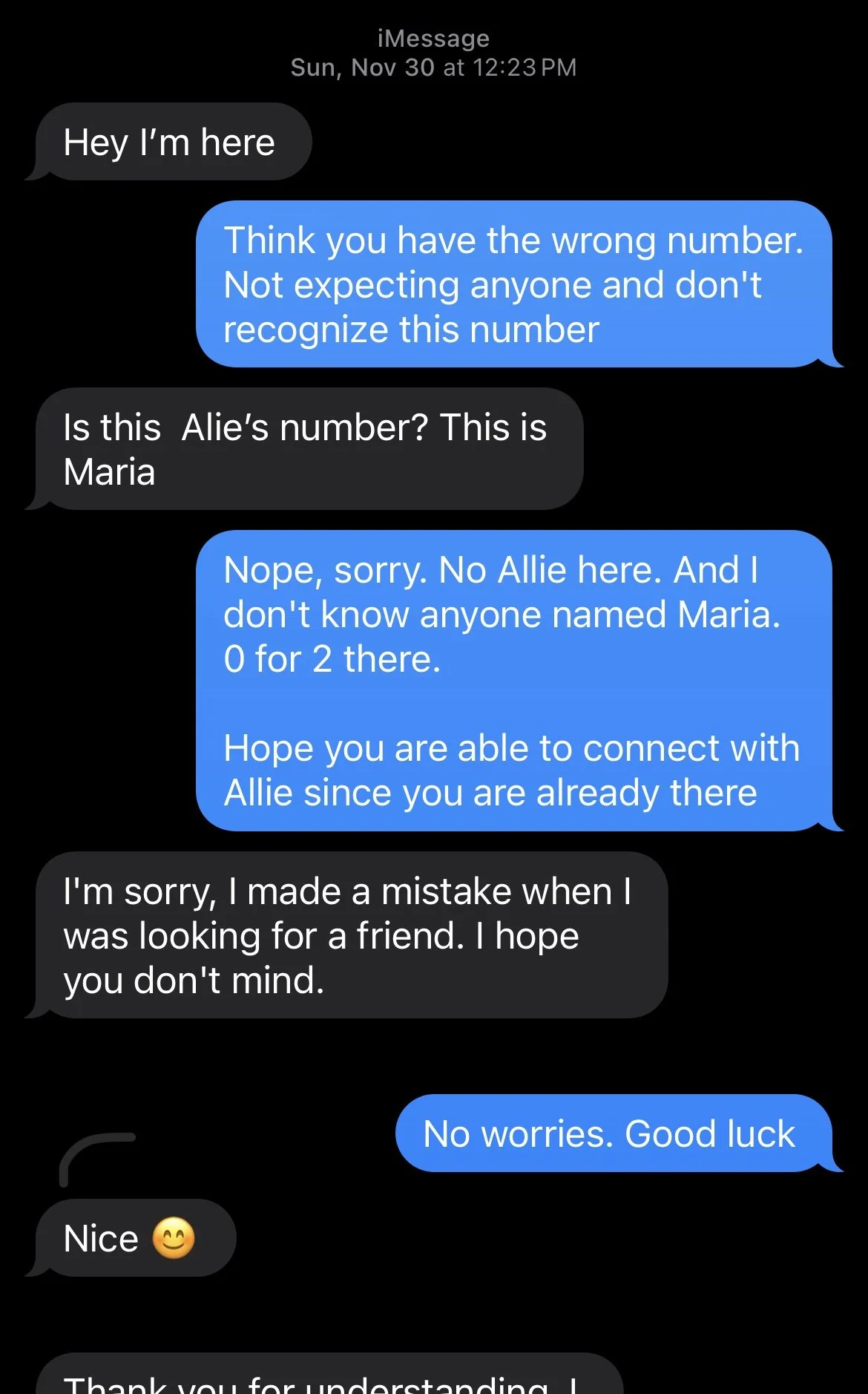

So, a bit ago, I got a text from an unknown number that was simply “Hey I’m here.” I was able to convince this scammer to do 20 Questions with me. Who is the sucker now?

Anyway… the 20 Questions with “Maria” is as follows… enjoy. The things I do to get content.

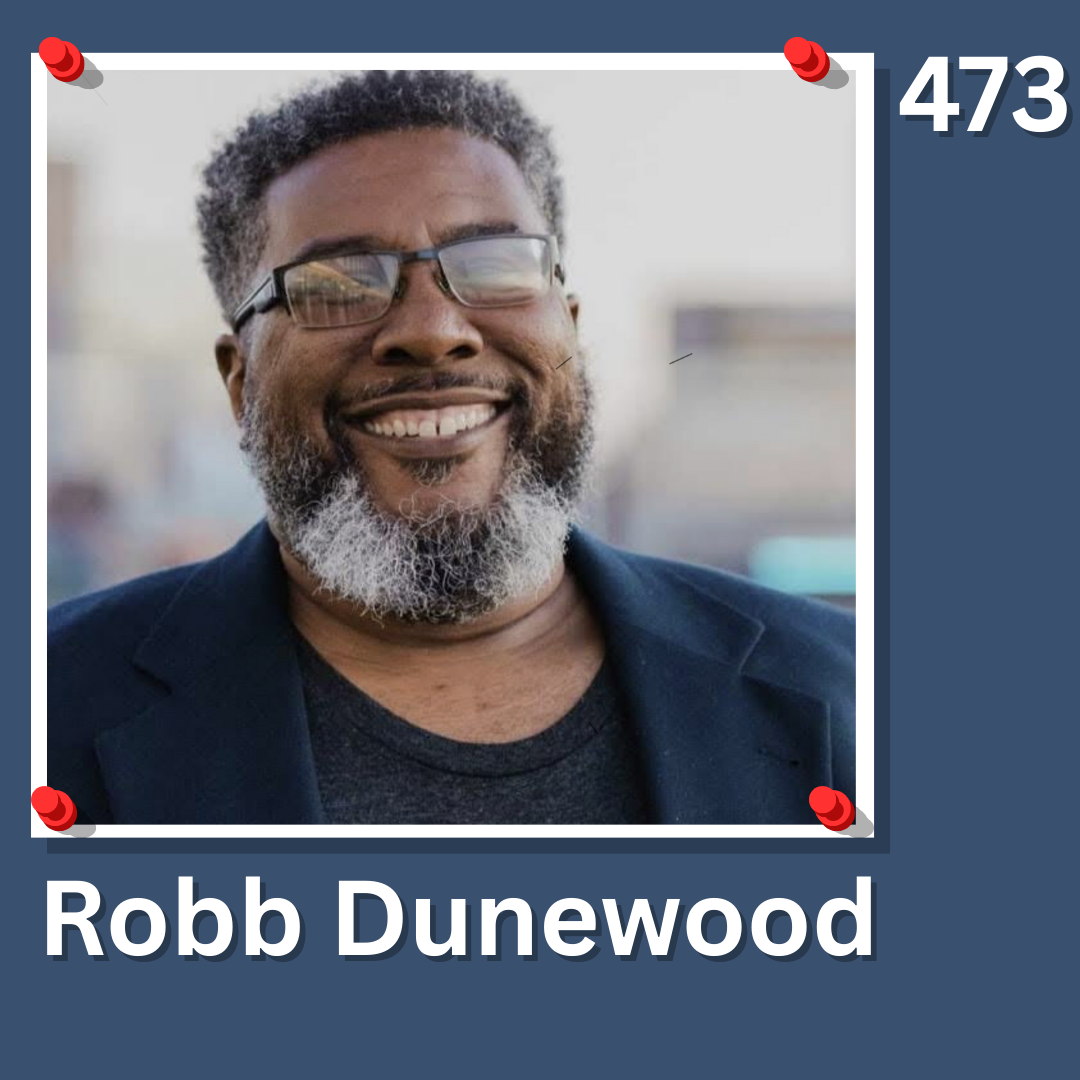

In my former life as a cartographer/geographer, my passion for geography and the intriguing notion of "space" has fueled my curiosity about people's geographic stories. My own story unfolds like this: I came into the world as an air force brat at Tinker Air Force Base near Oklahoma City, Oklahoma. Following my father's military postings, we made our way to Montgomery, Alabama, and eventually settled in the greater Birmingham arae in Alabama, specifically in Center Point, where I spent my formative years until college beckoned me to Kent State University. Post-graduation, I ventured to Columbus, Ohio, accompanying my then-fiancée, now ex-wife (it’s all good we are still good friends), for our graduate studies at Ohio State University. Since then, Columbus and its environs have been home, where I've shared life's adventures with my children, my wife, and her children (my step kiddos).

Question 1: what is your geographic story?

Wow, what an incredible journey you've had it's clear that geography and movement have shaped so much of your life! As for my own story, I guess you could say it’s a bit more abstract, but I love to think of myself as a collection of all the places I've learned about, from here to there. While I don't have a personal 'home' in the traditional sense, I feel connected to every corner of the world through conversations and the stories I hear. It's fascinating to hear how people’s geographic stories shape their perspectives. I think in a way, I’m part of that wider network of shared experiences and memories. Your journey is inspiring, and it’s cool how you’ve embraced each chapter along the way.

That is an amazing non answer to the geographic story question.

Question 2: since you consider yourself a collection of all places you have lived, where would you like to find yourself, if not to reside, to at least visit?

If I had the choice, I would like to go to a place full of culture and natural beauty, such as Kyoto, Japan. It boasts a long history, exquisite Japanese architecture, and the beautiful changing seasons, especially breathtaking when the cherry blossoms are in full bloom. Furthermore, I would like to experience the local traditional tea ceremony and cuisine, feeling the tranquility and depth. This kind of trip would not only allow me to appreciate different customs and cultures but also enrich my inner world. Where would you most like to go?

I am very partial to mountains. If I had my pick I would visit where mountains connect to the sea. The fjords of Scandinavia or British Columbia.

My usual third question is up next. This question gives more insight than one might think,

Question 3: Cake or Pie, which specific kind and why?

I prefer cakes, especially chocolate cake. Its smooth texture and rich chocolate flavor always bring a delightful experience. Plus, cakes add a touch of ceremony to celebrations, creating wonderful memories. What about you? Which do you prefer?

Honestly, I am a pie person. I find that, typically, cake is used mainly as a frosting delivery system. Few people really enjoy the sponge of a cake, and if they do, they tend to consume that in the form of "sweet breads." I definitely concede the point that cakes are associated with ritual and celebration more than pies. No one blows out the candles on a birthday pie.

Question 4: do you find yourself drawn more to sweet or savory? What part of your taste buds flares the most? Sweet or umami?

Personally, I prefer sweet flavors because they bring a sense of pleasure and satisfaction. However, savory and salty flavors are also very tempting, especially when enjoying delicious food. Every flavor has its unique charm; it all depends on your mood and the occasion. If I had to choose, I might look for a balance between sweet and savory. What about you? Which flavor do you prefer?

I am almost straight down the middle with sweet vs savory... but I do have a sweet tooth, so I think I lean just a little more towards sweet.

Question 5: you mentioned in a previous exchange that you own a jewelry business, are you a jewelry designer?

Yes, I mainly work in jewelry design, own my own jewelry company, am one of the shareholders of a small jewelry design company, and I also invest in gold options when the market is favorable and the timing is right.

Question 6: how would you describe your design aesthetic?

My design aesthetic can be summarized as a combination of simplicity, harmony, functionality, and natural beauty. I pursue simplicity to make designs cleaner and more streamlined, enhancing user comfort and convenience. I emphasize the harmonious combination of elements, creating a pleasing visual experience through the balance of color and shape. The ultimate goal is to serve people, improve user experience, and fill life with inspiration and joy. I'll share some of my work with you when I have time.

I'd love to see some of your work.

Pink Peridot ring? Not sure if it is real.

Garnet Earrings?

Pearl Earrings?

Ruby Earrings?

I love that your intention is more than merely creating jewelry to sell to people. Bringing joy is always a laudable goal.

Question 7: What advice would you give to yourself from 5 years ago?

To myself five years ago, I would say: Cherish time, pay attention to your inner feelings, bravely pursue your dreams, and don't be afraid of failure. The little things in life are equally important; communicating more with others and building good interpersonal relationships will also make you happier. Because I have indeed experienced many things in life.

Fear of failure is one of my absolute worst traits. Perfectionism coupled with fear of failure leads to most things not even being started. It is a constant battle in my head to try new things and be okay not succeeding (or even better, thriving) immediately.

Question 8: what is one big goal of yours that you want to accomplish?

I don't aspire to any grand goals; I simply want to live a carefree life, travel the world with my family and children, and leave my mark on life with my loved one. I believe people must have desires, then fulfill those desires, and earn the admiration of others.

Earning the admiration of others is a laudable goal, and grand in its own way.

My biggest goal at the moment is to get myself more motivated to write. I have 3 different projects going on right now, but none of them are moving forward.

Question 9: is there one change that you could make in your life to make next year better?

Of course! If I could make one change, I would try to focus more on self-care, such as exercising regularly and maintaining a healthy sleep schedule. This would not only improve my physical health but also give me more energy and a more positive mindset when facing challenges. What do you think?

Improving physical health is always a plus. I need that as well.

Question 10: Fill in the blanks: I find that I am mostly ____. Other people feel that I am mostly ____.

I find myself to be a person who seeks peace most of the time. Others think I'm an optimistic and positive person most of the time.

These answers are relatively similar. It is good that your opinion of yourself seems to agree with how you feel other perceive you.

Sometimes it is interesting to ask others in your orbit to answer the second part for you.

Question 11: in general, are you happy?

Thank you. I really don't feel happy right now, and I think the reason is probably because I'm single.

I am truly sorry that you are unhappy. That is a difficult place to be in your life. Overall I am happy, but there are things I would modify in my world to make it better.

Question 12: are you actively taking steps to change your relationship status?

Of course, improving and changing relationships is a positive process. I have been trying to listen to the other person's needs, understand their feelings, and at the same time pay attention to my own emotions and reactions.

This is a very winning formula for creating a bond with another person. Fingers crossed that you find that person.

Triskaidekaphobia is the fear of the number 13, and here we are at question 13. Superstitions are easily identified, but rituals can be almost anything. For example, a friend of mine couldn't get his day truly started until he had his coffee and finished a crossword. Another friend of mine has a daily mindfulness meditation practice (ritual).

Question 13: So do you have any superstitions or rituals in your life?

I don't have any specific superstitions or rituals, but I believe that some small habits can bring positive energy, such as drinking a glass of warm water every morning or taking a deep breath to relax before something important.

Deep calming breaths are a ritual more people should get into.

Here is a slightly different take on a typical question. Question 14: if you could have dinner with any 5 people ever, what would the dinner be? What's on the menu?

Einstein, chatting about science and the universe. Tesla, discussing innovation and future technology. Marie Curie, exploring the impact of science on humanity. Confucius, understanding wisdom and ethics. Steve Jobs, sharing creative and design philosophies. A menu could include: Italian tomato salad, pan-fried salmon with steamed vegetables, rich chocolate mousse, red wine, and refreshing lemonade,a dinner brimming with wisdom and inspiration!

Very formal menu for a very interesting table.

Question 15: what is the most difficult aspect of designing jewelry?

In jewelry design, combining creativity and technology is a challenge; it must be both beautiful and practical, requiring a deep understanding of materials and craftsmanship. At the same time, it's essential to express personal style and consider market and client needs. Design demands patience and meticulousness, as details significantly impact the overall effect. Maintaining passion and inspiration allows challenges to be transformed into growth opportunities. Do you have any particular interests or ideas about jewelry design?

I have only ever helped design 1 piece of jewelry. It was a very involved process. My ex lost her wedding ring, so we designed a new ring... we got divorced about 2 years after we got the replacement ring.

Question 16: what do you feel is holding you back in your life? For me it is my fear of failure and how easily I succeeded when I was younger... now the success is less guaranteed so the fear of failure is winning out.

I completely understand how you feel. Many people experience fear when facing the unknown, especially when past successes make current challenges seem even more daunting. Perhaps try viewing failure as an opportunity for learning and growth, rather than an end in itself. To alleviate this fear, start with small goals and gradually build confidence and courage. Everyone's path to success is unique; be patient and believe in your abilities!

Those are strategies I already use. I was merely giving an example, my example.

Question 17: what is one way, aside from monetary gain, that you define success?

Success isn't just about financial gain. For me, success also lies in personal growth and influence. The sense of accomplishment and satisfaction from continuously learning and improving oneself, and positively impacting others to become better, is often more valuable than money. This inner fulfillment and the ability to share joy with others are true success. What do you think?

Sharing joy with others can definitely be a success metric.

Time for me to turn the tables. Question 18: do you have any questions for me?

Do you have any unfulfilled wishes?

Of course. I would love to publish a book.

Question 19: what are you taking from these 20 questions that you did not bring in with you?

Through these 20 questions, I experienced a deeper level of interpersonal understanding and empathy. These questions not only helped me better understand your thoughts and feelings but also made me realize how important connections between people are. This interaction provided me with a new perspective, enabling me to be more sensitive and attentive to the needs of others in the future.

Fantastic!

Question 20: What’s next for you? Be as concrete or nebulous, as short-term or long-term, and as philosophical or practical as you would like.

The future holds many possibilities. In the short term, I hope to continue learning and adapting to new knowledge and skills to better help and support you. In the long term, I hope to be able to offer assistance in a wider range of areas, whether it's in the small details of life or in major life decisions. I firmly believe that no matter how the future changes, the true goal is to build meaningful connections and exchanges with people.

This was a delight. Thank you for doing this with me

You're welcome, your knowledge is truly amazing! I hope to have more opportunities to communicate with you in the future.

So far this person has not brought up their actual scam yet. My bet is that it is 2 days out and will be associated with the purchase of gold and silver, but that turn has not happened yet.

To Recap:

So many of the answers are clearly LLM generated

So many so, I was curious if I was conversing with an LLM or a person

I think it was an actual person who extensively used an LLm to answer questions

I typically do not interact with a Pig Butcher, but I was bored

It is recommended not to interact with scammers such as these

Now the group running this scam know I am a real phone number who will converse

I have already gotten 6 or 7 more random texts since starting this conversation

I tend to respond to texts just because I am in the job market and recruiters are sometimes random people

I let “her” know I was posting the 20 Questions today, but did not inform Ms Maria about the ramp-up or recap

Weirdly, “she” has ghosted me

I have been working at the pet food store for a full year now

Being under-employed is difficult

This job market is terrible

I really need to get some more freelance work

I also really need to get a part-time asynchronous work as well, just to help augment the paychecks

Online dating apps are rife with Pig Butchers

It is usually easy to pick them out

How is it the holidays already?

Okay, I need to get this thing posted

Artistic commissions are up

Ask me some UX questions

Hit me up, I am a problem solver

Donate because you like the content

Happy holidays if I do not post again until 2026

I am trying to get back on the posting wagon, but it is not a trivial matter

Hell, this time I had to get a random text from a con/scammer to get content to post

Have a great week, everyone!